In this post, we will cover how to setup two node high availability Apache cluster using pacemaker on RHEL 9/8

Pacemaker is a High Availability cluster Software for Linux like Operating System. Pacemaker is known as ‘Cluster Resource Manager ‘, It provides maximum availability of the cluster resources by doing fail over of resources between the cluster nodes. Pacemaker use corosync for heartbeat and internal communication among cluster components, Corosync also take care of Quorum in cluster.

Prerequisites

Before we begin, make sure you have the following:

- Two RHEL 9/8 servers

- Red Hat Subscription or Locally Configured Repositories

- SSH access to both servers

- Root or sudo privileges

- Internet connectivity

Lab Details:

- Server 1: node1.example.com (192.168.1.6)

- Server 2: node2.exaple.com (192.168.1.7)

- VIP: 192.168.1.81

- Shared Disk: /dev/sdb (2GB)

Without any further delay, let’s deep dive into the steps,

1) Update /etc/hosts file

Add the following entries in /etc/hosts file on both the nodes,

192.168.1.6 node1.example.com 192.168.1.7 node2.example.com

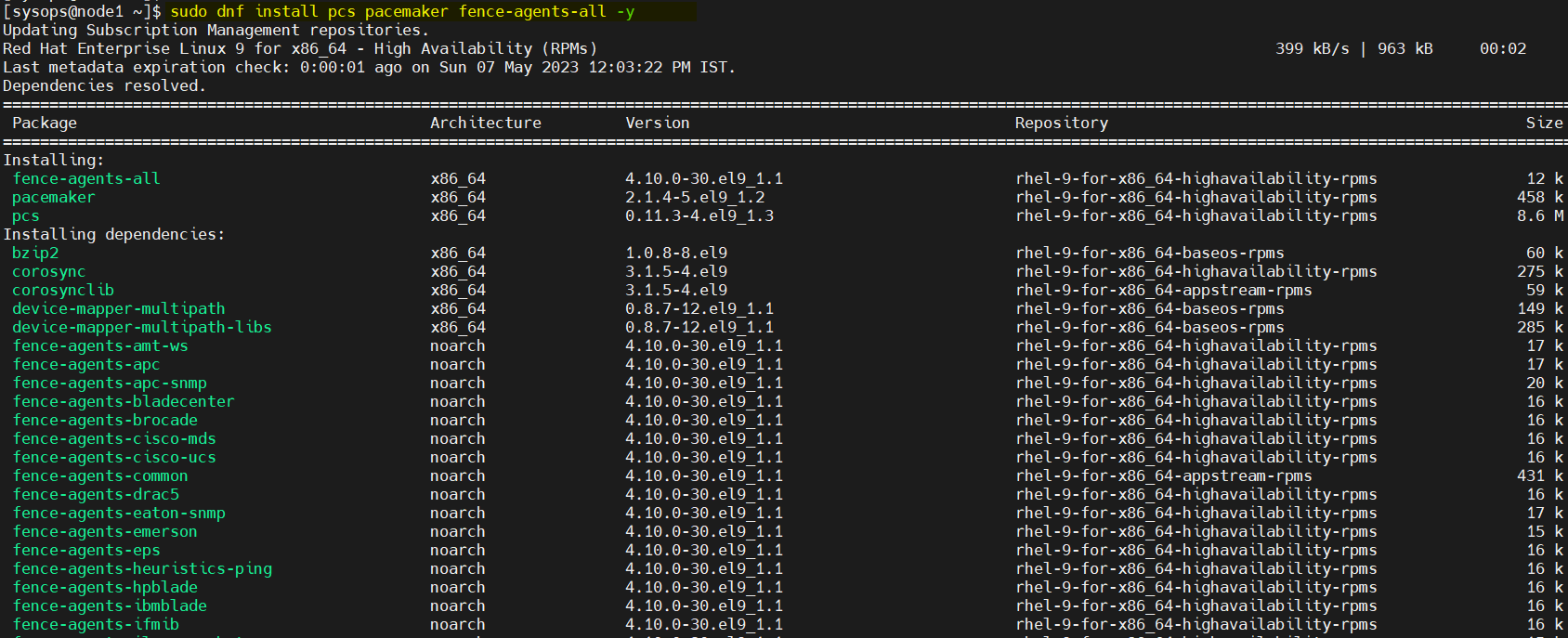

2) Install high availability (pacemaker) package

Pacemaker and other required packages are not available in default packages repositories of RHEL 9/8. So, we must enable high availability repository. Run following subscription manager command on both nodes.

For RHEL 9 Servers

$ sudo subscription-manager repos --enable=rhel-9-for-x86_64-highavailability-rpms

For RHEL 8 Servers

$ sudo subscription-manager repos --enable=rhel-8-for-x86_64-highavailability-rpms

After enabling the repository, run beneath command to install pacemaker packages on both the nodes,

$ sudo dnf install pcs pacemaker fence-agents-all -y

3) Allow high availability ports in firewall

To allow high availability ports in the firewall, run beneath commands on each node,

$ sudo firewall-cmd --permanent --add-service=high-availability $ sudo firewall-cmd --reload

4) Set password to hacluster and start pcsd service

Set password to hacluster user on both servers, run following echo command

$ echo "<Enter-Password>" | sudo passwd --stdin hacluster

Execute the following command to start and enable cluster service on both servers

$ sudo systemctl start pcsd.service $ sudo systemctl enable pcsd.service

5) Create high availability cluster

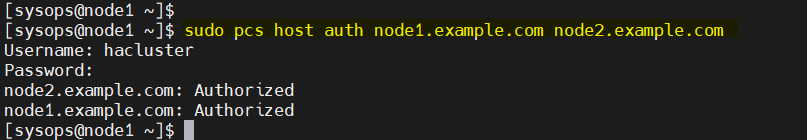

Authenticate both the nodes using pcs command, run the below command from any node. In my case I am running it on node1,

$ sudo pcs host auth node1.example.com node2.example.com

Use hacluster user to authenticate,

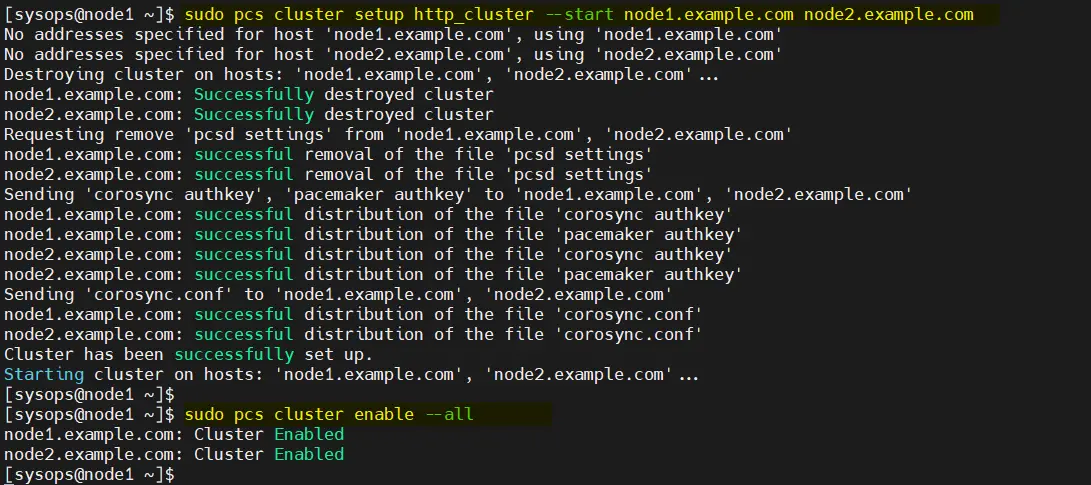

Add both nodes to the cluster using following “pcs cluster setup” command , here I am using the cluster name as http_cluster . Run beneath commands only on node1,

$ sudo pcs cluster setup http_cluster --start node1.example.com node2.example.com $ sudo pcs cluster enable --all

Output of both the commands would look like below,

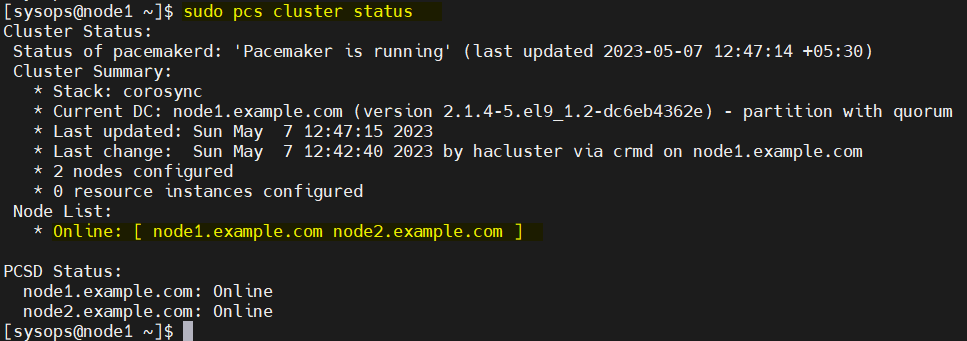

Verify initial cluster status from any node,

$ sudo pcs cluster status

Note : In our lab, we don’t have any fencing device, so we are disabling it. But in production environment it is highly recommended to configure the fencing.

$ sudo pcs property set stonith-enabled=false $ sudo pcs property set no-quorum-policy=ignore

6) Configure shared Volume for the cluster

On the servers, a shared disk (/dev/sdb) of size 2GB is attached. So, we will configure it as LVM volume and format it as xfs file system.

Before start creating lvm volume, edit /etc/lvm/lvm.conf file on both the nodes.

Change the parameter “# system_id_source = “none”” to system_id_source = “uname”

$ sudo sed -i 's/# system_id_source = "none"/ system_id_source = "uname"/g' /etc/lvm/lvm.conf

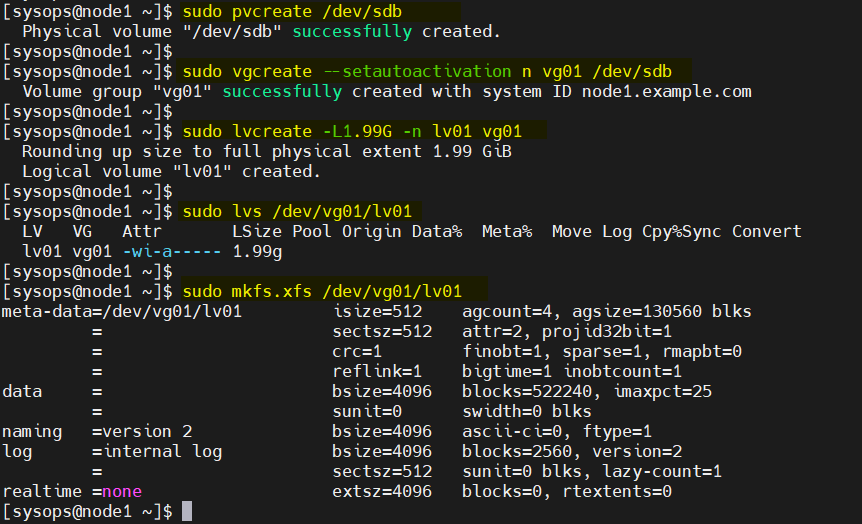

Execute following set of commands one after the another on node1 to create lvm volume

$ sudo pvcreate /dev/sdb $ sudo vgcreate --setautoactivation n vg01 /dev/sdb $ sudo lvcreate -L1.99G -n lv01 vg01 $ sudo lvs /dev/vg01/lv01 $ sudo mkfs.xfs /dev/vg01/lv01

Add the shared device to the LVM devices file on the second node (node2.example.com) of the cluster, run below command on node2 only,

[sysops@node2 ~]$ sudo lvmdevices --adddev /dev/sdb [sysops@node2 ~]$

7) Install and Configure Apache Web Server (HTTP)

Install apache web server (httpd) on both the servers, run following dnf command

$ sudo dnf install -y httpd wget

Also allow Apache ports in firewall, run following firewall-cmd command on both servers

$ sudo firewall-cmd --permanent --zone=public --add-service=http $ sudo firewall-cmd --permanent --zone=public --add-service=https $ sudo firewall-cmd --reload

Create status.conf file on both the nodes in order for the Apache resource agent to get the status of Apache

$ sudo bash -c 'cat <<-END > /etc/httpd/conf.d/status.conf <Location /server-status> SetHandler server-status Require local </Location> END' $

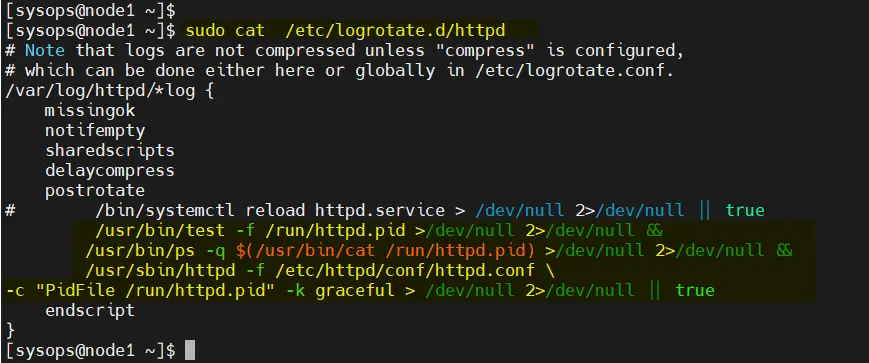

Modify /etc/logrotate.d/httpd on both the nodes

Replace the below line

/bin/systemctl reload httpd.service > /dev/null 2>/dev/null || true

With three lines

/usr/bin/test -f /run/httpd.pid >/dev/null 2>/dev/null && /usr/bin/ps -q $(/usr/bin/cat /run/httpd.pid) >/dev/null 2>/dev/null && /usr/sbin/httpd -f /etc/httpd/conf/httpd.conf \ -c "PidFile /run/httpd.pid" -k graceful > /dev/null 2>/dev/null || true

Save and exit the file.

8) Create a sample web page for Apache

Perform the following commands only on node1,

$ sudo lvchange -ay vg01/lv01 $ sudo mount /dev/vg01/lv01 /var/www/ $ sudo mkdir /var/www/html $ sudo mkdir /var/www/cgi-bin $ sudo mkdir /var/www/error $ sudo bash -c ' cat <<-END >/var/www/html/index.html <html> <body>High Availability Apache Cluster - Test Page </body> </html> END' $ $ sudo umount /var/www

Note: If SElinux is enable, then run following on both the servers,

$ sudo restorecon -R /var/www

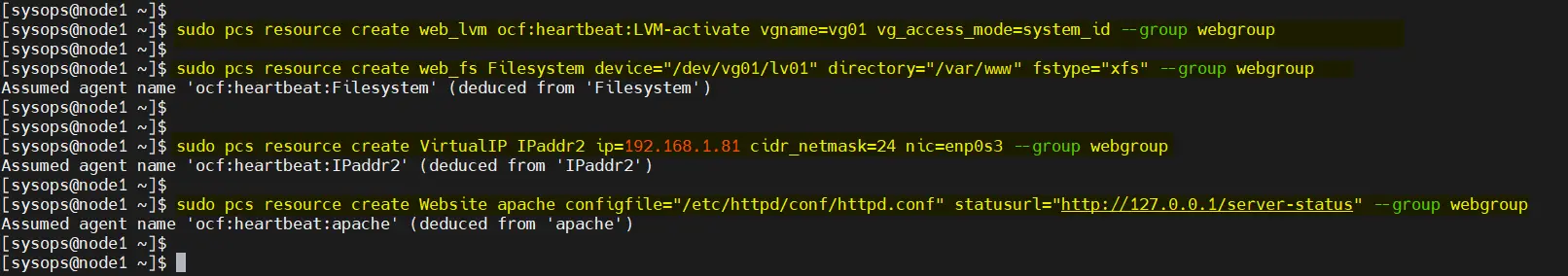

9) Create cluster resources and resource group

Define resource group and cluster resources for clusters. In my case, we are using “webgroup” as resource group.

- web_lvm is name of resource for shared lvm volume (/dev/vg01/lv01)

- web_fs is name of filesystem resource which will be mounted on /var/www

- VirtualIP is the resource for VIP (IPadd2) for nic enp0s3

- Website is the resource of Apache config file.

Execute following set of commands from any node.

$ sudo pcs resource create web_lvm ocf:heartbeat:LVM-activate vgname=vg01 vg_access_mode=system_id --group webgroup $ sudo pcs resource create web_fs Filesystem device="/dev/vg01/lv01" directory="/var/www" fstype="xfs" --group webgroup $ sudo pcs resource create VirtualIP IPaddr2 ip=192.168.1.81 cidr_netmask=24 nic=enp0s3 --group webgroup $ sudo pcs resource create Website apache configfile="/etc/httpd/conf/httpd.conf" statusurl="http://127.0.0.1/server-status" --group webgroup

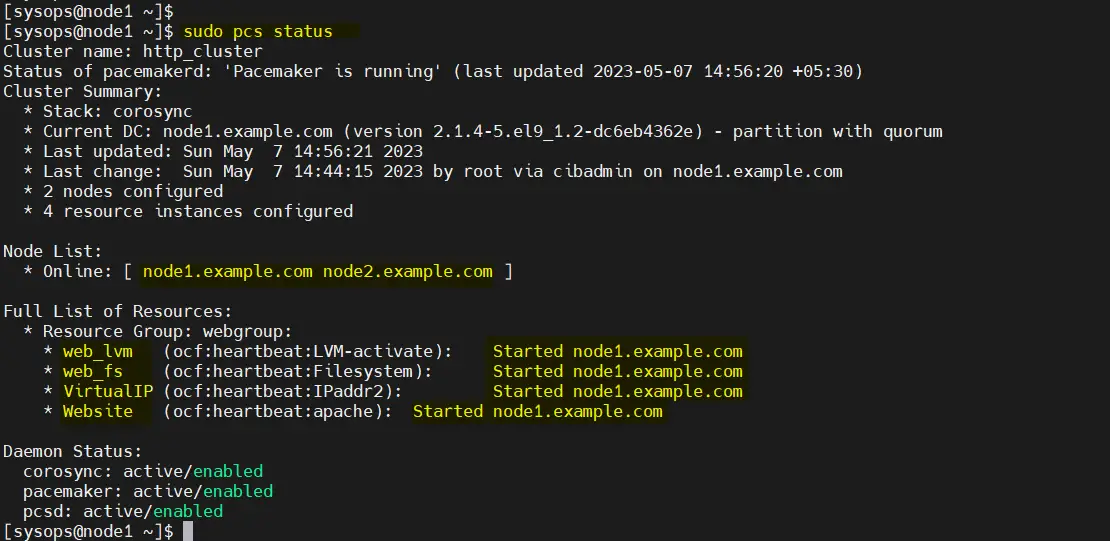

Now verify the cluster resources status, run

$ sudo pcs status

Great, output above shows that all the resources are started on node1.

10) Test Apache Cluster

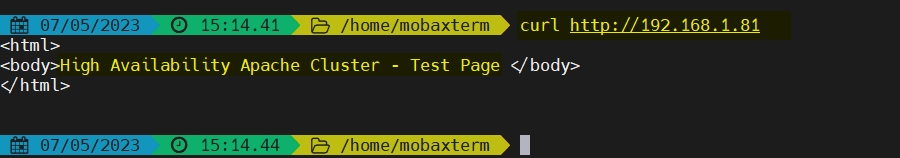

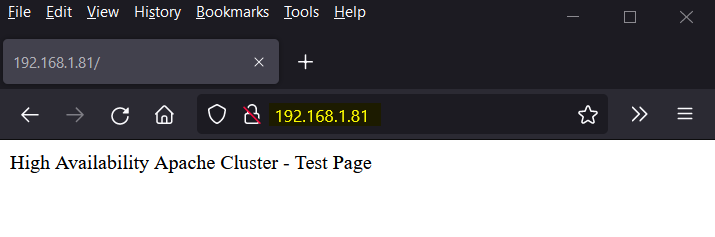

Try to access web page using VIP – 192.168.1.81

Either use curl command or web browser to access web page

$ curl http://192.168.1.81

or

Perfect, above output confirms that we are able access the web page of our highly available Apache cluster.

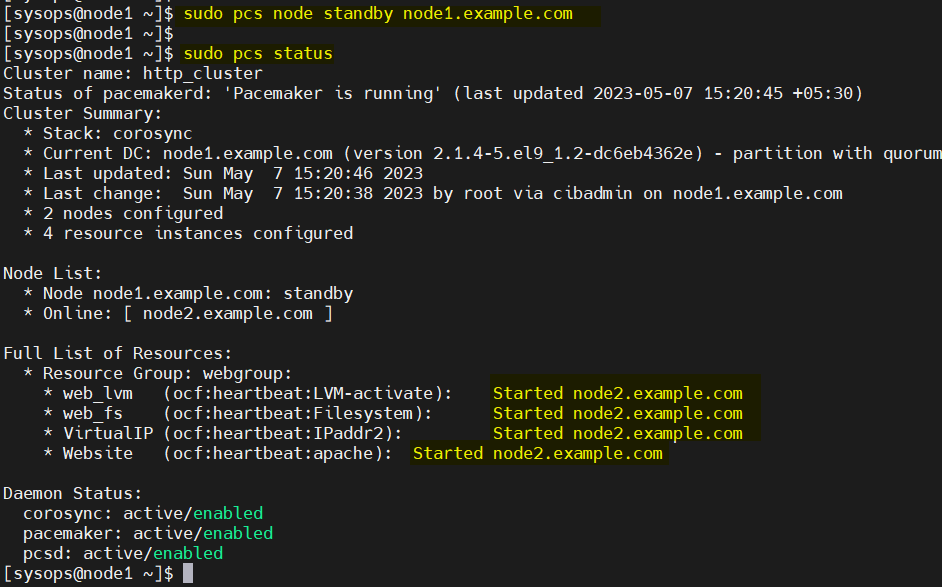

Let’s try to move cluster resources from node1 to node2, run

$ sudo pcs node standby node1.example.com $ sudo pcs status

Perfect, above output confirms that cluster resources are migrated from node1 to node2.

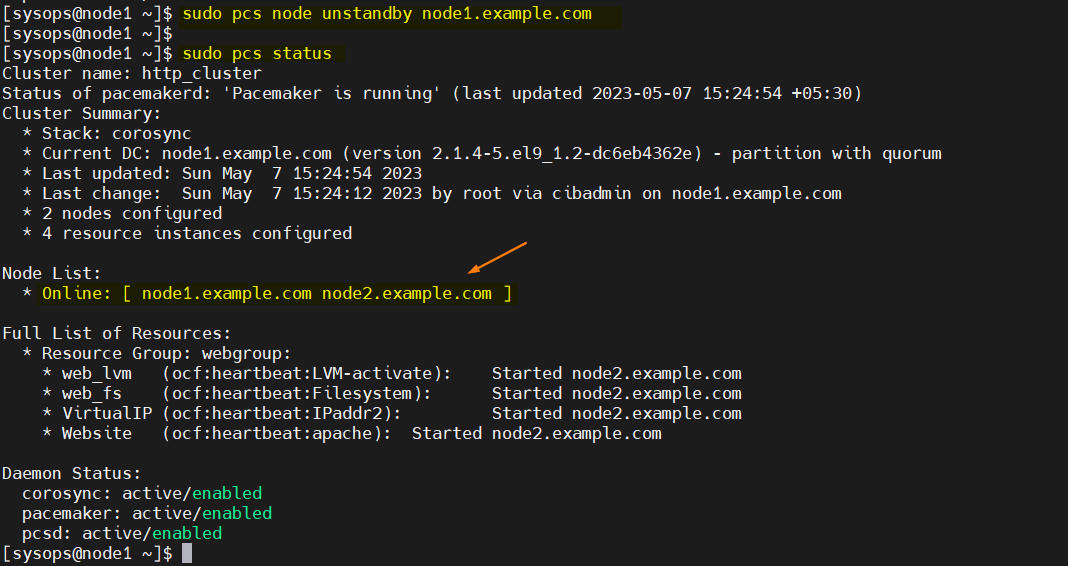

To remove the node (node1.example.com) from standby, run beneath command

$ sudo pcs node unstandby node1.example.com

That’s all from this post, I hope you have found it informative, kindly do post your queries and feedback in below comments section.

Hi, I followed your guide and it works well for the most part. However I was wondering how you can change the html content after you make a resource of it in pacemaker. Thanks.

Hi, nice guide, but I have a small problem. I am totally followed your guide, but in the end, node 2 doesn’t have partition storage part1, how to fix this problem? hope somebody can help me.

thanks

Hi, Your question has much info to help troubleshot. Please provide info, such command out. For example:

# lvdisplay /dev/sdb

# lvs

# blkid

# ls -lh /dev/mapper

Sorry for the typos. I meant to say that please provide us with enough information as you have not provided adequate info in the initial question.

Beautiful guide. But if I need to extend the disk dynamically I will not have it. Because the disk you are with fixed size and not in lvm how to solve this

Hi Leandro,

It is recommended to use LVM on shared storage in cluster setup. You can’t extend the disk dynamically unless and until it is on LVM

This was a very well written guide that worked for me. Thanks for making it!

pcs stonith show

scsi_fecing_device (stonith:fence_scsi): Stopped

Hi Padeep Kumar, a job well done. I followed your instructions and I was able to setup my cluster. Cluster resource manages my httpd service. My question is, how do I restart the httpd service everytime I do changes on the configuration?

Hi Pradeep,

We are planning to purchase SSL certificate for Apache Webserver which is running in cluster. How to use SSL certificate for Virtaul IP, While generating CSR file CN should be Virtual IP or fqdn?

Hi Santosh,

It should be FQDN not the Virtual IP.

if the website is on CPanel / WHM , how could i get it done ?