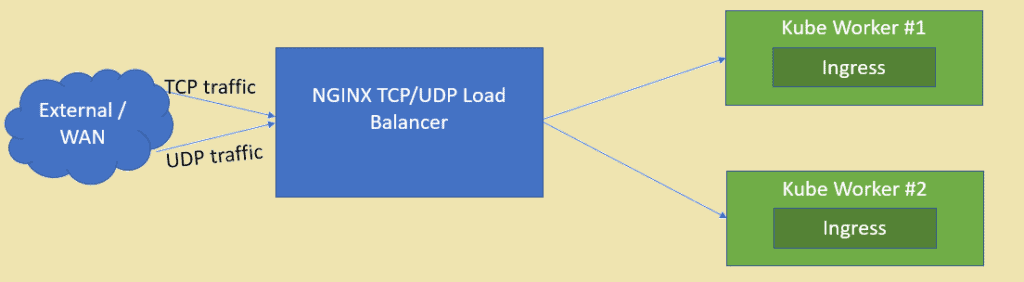

As we know, NGINX is a highly rated open source web server, but it can also be used as a TCP and UDP load balancer. One of the main benefits of using NGINX as a load balancer over HAProxy is that it can also load balance UDP-based traffic. In this article we will demonstrate how NGINX can be configured as a load balancer for applications deployed in a Kubernetes cluster.

I am assuming the Kubernetes cluster is already set up and running. We will create a VM based on CentOS / RHEL for NGINX.

Following are the lab setup details:

- NGINX VM (Minimal CentOS / RHEL) – 192.168.1.50

- Kube Master – 192.168.1.40

- Kube Worker 1 – 192.168.1.41

- Kube Worker 2 – 192.168.1.42

Let’s jump into the installation and configuration of NGINX. In my case I am using minimal CentOS 8 for NGINX.

Step 1) Enable EPEL repository for nginx package

Log in to your CentOS 8 system and enable the EPEL repository, since the nginx package may not be available in the default repositories of your CentOS / RHEL release.

[linuxtechi@nginxlb ~]$ sudo dnf install epel-release -y

Step 2) Install NGINX with dnf command

Run the following dnf command to install nginx,

[linuxtechi@nginxlb ~]$ sudo dnf install nginx -y

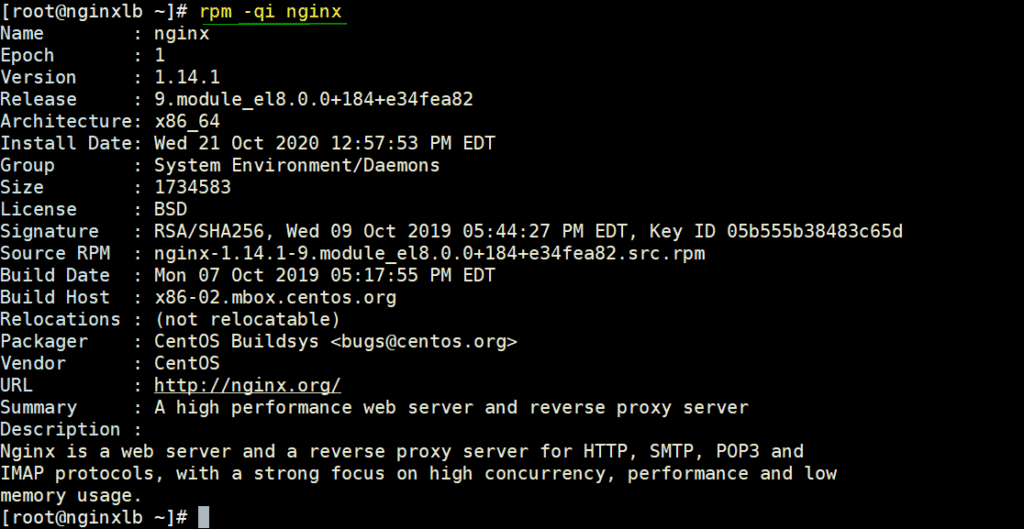

Verify the NGINX package details by running the rpm command below,

# rpm -qi nginx

Allow the NGINX ports in the firewall by running the commands below,

[root@nginxlb ~]# firewall-cmd --permanent --add-service=http

[root@nginxlb ~]# firewall-cmd --permanent --add-service=https

[root@nginxlb ~]# firewall-cmd --reload

Set SELinux to permissive mode using the following commands,

[root@nginxlb ~]# sed -i 's/^SELINUX=.*$/SELINUX=permissive/' /etc/selinux/config

[root@nginxlb ~]# setenforce 0

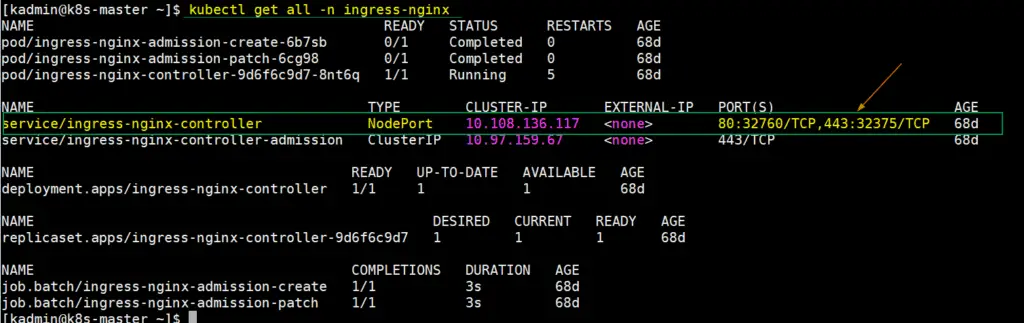

Step 3) Extract NodePort details for ingress controller from Kubernetes setup

In Kubernetes, the nginx ingress controller handles incoming traffic for the defined resources. When the ingress controller is deployed, a service is also created which maps host node ports to ports 80 and 443. These node ports are opened on each worker node. To get these details, log in to the kube master node (control plane) and run,

$ kubectl get all -n ingress-nginx

As the output shows, NodePort 32760 on each worker node is mapped to port 80 and NodePort 32375 is mapped to port 443. We will use these node ports in the NGINX configuration file for load balancing TCP traffic.
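If you prefer to pull the NodePort values out in a script rather than read them off the screen, a small helper can parse the PORT(S) column. The sketch below is only an illustration: the function name node_port_for is hypothetical, and it assumes the column has the usual port:nodePort/protocol form (for example 80:32760/TCP,443:32375/TCP).

```shell
# node_port_for PORTS SERVICE_PORT
# PORTS is the PORT(S) column of a NodePort service,
# e.g. "80:32760/TCP,443:32375/TCP".
# Prints the NodePort mapped to SERVICE_PORT; returns non-zero if none is found.
node_port_for() {
  printf '%s\n' "$1" | tr ',' '\n' |
    awk -F'[:/]' -v want="$2" '
      # Fields are: service port, node port, protocol.
      $1 == want && NF >= 3 { print $2; found = 1 }
      END { exit !found }'
}
```

For example, node_port_for "80:32760/TCP,443:32375/TCP" 443 prints 32375, the NodePort that serves HTTPS in this lab.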

Step 4) Configure NGINX to act as TCP load balancer

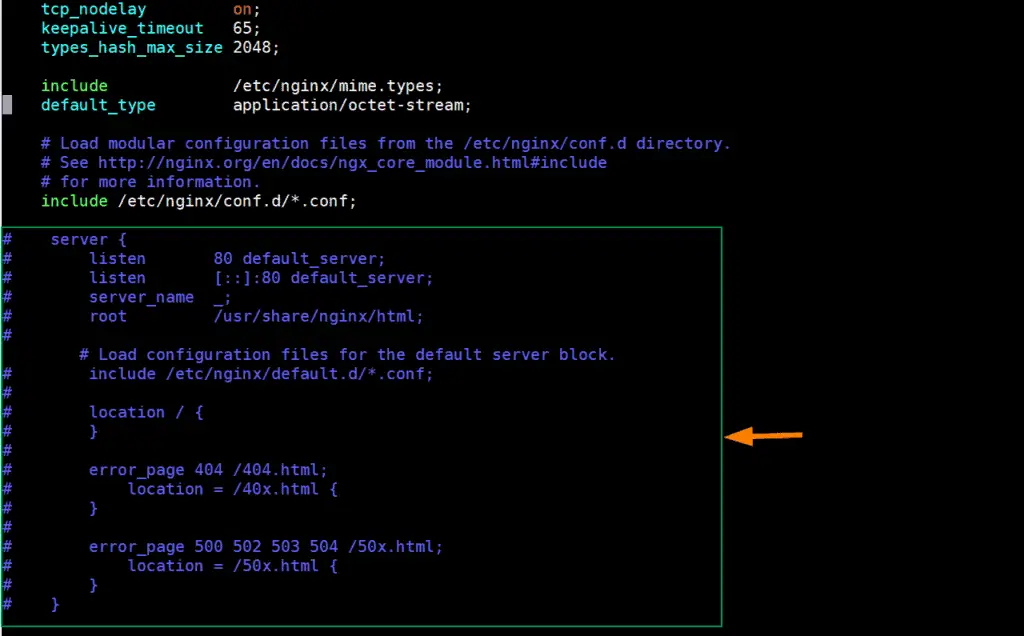

Edit the nginx configuration file and add the following contents to it,

[root@nginxlb ~]# vim /etc/nginx/nginx.conf

Comment out the server section (lines 38 to 57) and add the following lines inside the http block,

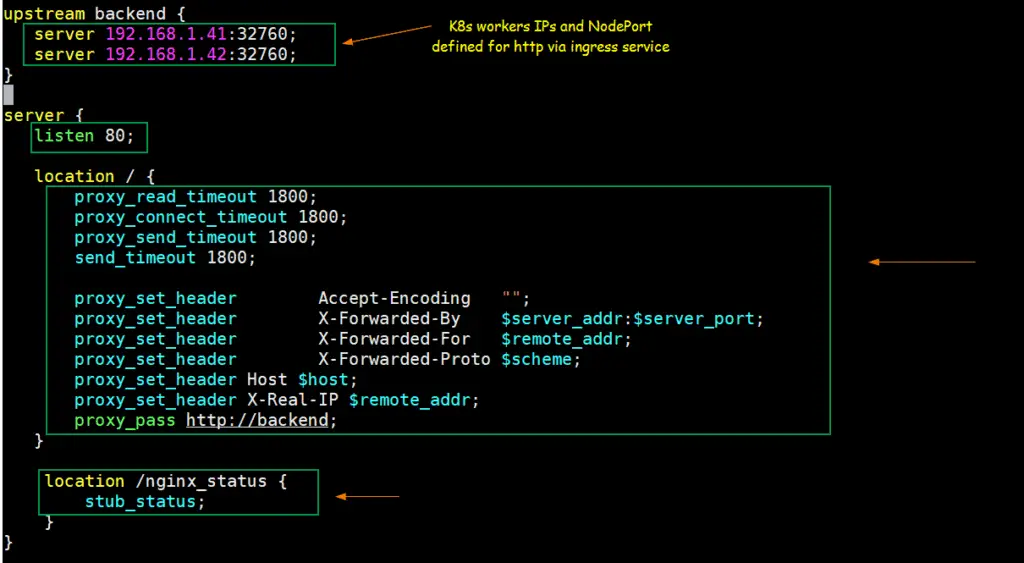

upstream backend {

server 192.168.1.41:32760;

server 192.168.1.42:32760;

}

server {

listen 80;

location / {

proxy_read_timeout 1800;

proxy_connect_timeout 1800;

proxy_send_timeout 1800;

send_timeout 1800;

proxy_set_header Accept-Encoding "";

proxy_set_header X-Forwarded-By $server_addr:$server_port;

proxy_set_header X-Forwarded-For $remote_addr;

proxy_set_header X-Forwarded-Proto $scheme;

proxy_set_header Host $host;

proxy_set_header X-Real-IP $remote_addr;

proxy_pass http://backend;

}

location /nginx_status {

stub_status;

}

}

Save & exit the file.

With the above changes, any request arriving on port 80 of the NGINX server IP is routed to the Kubernetes worker node IPs (192.168.1.41/42) on NodePort 32760.
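Because the upstream block does not name a balancing method, NGINX falls back to its default round-robin behaviour and alternates requests between the two workers. The following shell sketch only illustrates that rotation; pick_backend is a hypothetical helper, and it ignores the server weights and failure handling that real NGINX applies.

```shell
# pick_backend REQUEST_NO BACKEND...
# Prints the backend that plain round-robin would choose for the given
# zero-based request number. Illustration only, not how NGINX is implemented.
pick_backend() {
  request_no="$1"
  shift
  # Skip (request_no mod number_of_backends) entries; the next one is the pick.
  shift $(( request_no % $# ))
  printf '%s\n' "$1"
}

# Show where the first four requests would land.
for n in 0 1 2 3; do
  pick_backend "$n" 192.168.1.41:32760 192.168.1.42:32760
done
```

The loop alternates between 192.168.1.41:32760 and 192.168.1.42:32760, which is the pattern you would expect to see in the access logs of the ingress controller pods.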

Let’s start and enable the NGINX service using the following commands,

[root@nginxlb ~]# systemctl start nginx

[root@nginxlb ~]# systemctl enable nginx

Test NGINX for TCP Load balancer

To test whether NGINX is working as a TCP load balancer for Kubernetes, create an nginx-based deployment, expose the deployment via a service and define an ingress resource for it. I used the following commands and YAML file to deploy these Kubernetes objects,

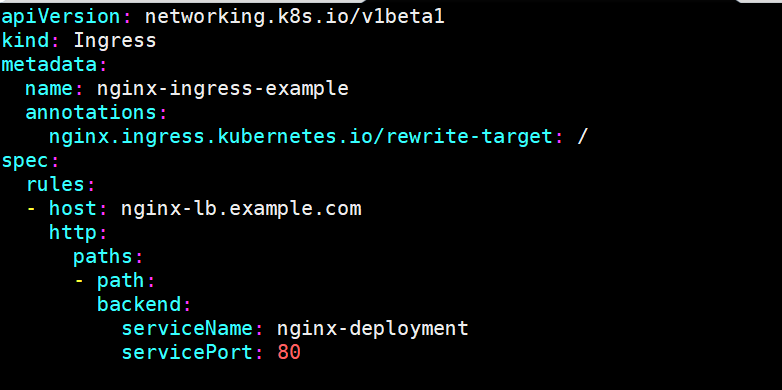

[kadmin@k8s-master ~]$ kubectl create deployment nginx-deployment --image=nginx

deployment.apps/nginx-deployment created

[kadmin@k8s-master ~]$ kubectl expose deployments nginx-deployment --name=nginx-deployment --type=NodePort --port=80

service/nginx-deployment exposed

[kadmin@k8s-master ~]$ vi nginx-ingress.yaml

[kadmin@k8s-master ~]$ kubectl create -f nginx-ingress.yaml

ingress.networking.k8s.io/nginx-ingress-example created
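The contents of nginx-ingress.yaml are not reproduced in the text above. As a rough guide only, a manifest along the following lines would match the names used in this article (ingress nginx-ingress-example, host nginx-lb.example.com, service nginx-deployment on port 80). The networking.k8s.io/v1 API version, the ingressClassName value nginx and the helper name write_ingress_manifest are assumptions, so adjust them to your cluster.

```shell
# write_ingress_manifest FILE
# Writes an example Ingress manifest to FILE.
# Assumptions (not taken from the article): API version networking.k8s.io/v1
# and an ingress class called "nginx".
write_ingress_manifest() {
  cat > "$1" <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx-ingress-example
spec:
  ingressClassName: nginx
  rules:
  - host: nginx-lb.example.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: nginx-deployment
            port:
              number: 80
EOF
}
```

Calling write_ingress_manifest nginx-ingress.yaml produces a file that can then be passed to kubectl create -f as shown in the commands above.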

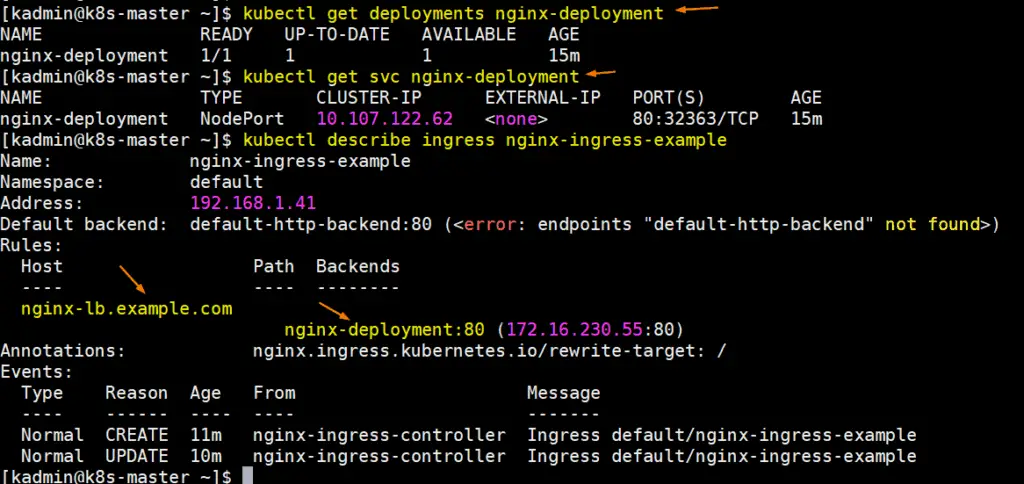

Use kubectl get to check the deployment, svc and ingress details (for example, kubectl get deployment,svc,ingress).

Perfect. Now update your system’s hosts file so that nginx-lb.example.com points to the NGINX server’s IP address (192.168.1.50),

192.168.1.50 nginx-lb.example.com
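On a Linux or macOS client the entry can be added from a script. The helper below is a minimal sketch (add_host_entry is a hypothetical name) that appends the line only when the hostname is not already present, so it is safe to run twice. It takes the file path as an argument so you can try it on a scratch copy before touching /etc/hosts.

```shell
# add_host_entry FILE IP NAME
# Appends "IP NAME" to FILE unless a non-comment line already lists NAME.
add_host_entry() {
  file="$1"; ip="$2"; name="$3"
  # awk exits 0 only if NAME already appears as a hostname field.
  if [ -f "$file" ] && awk -v n="$name" '
      $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
      END { exit !found }' "$file"; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}
```

Run as root, add_host_entry /etc/hosts 192.168.1.50 nginx-lb.example.com would add the mapping used in this lab.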

Let’s ping the hostname to confirm that it resolves to the NGINX server IP,

# ping nginx-lb.example.com

Pinging nginx-lb.example.com [192.168.1.50] with 32 bytes of data:

Reply from 192.168.1.50: bytes=32 time<1ms TTL=64

Reply from 192.168.1.50: bytes=32 time<1ms TTL=64

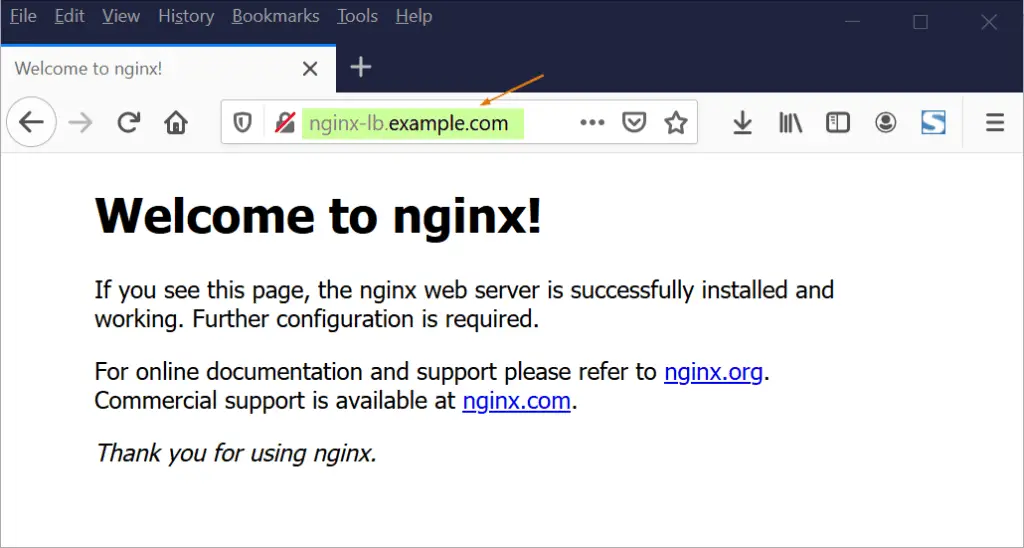

Now try to access the URL via web browser,

Great, the above confirms that NGINX is working fine as a TCP load balancer, because it is load balancing the traffic (HTTP, carried over TCP) arriving on port 80 between the K8s worker nodes.

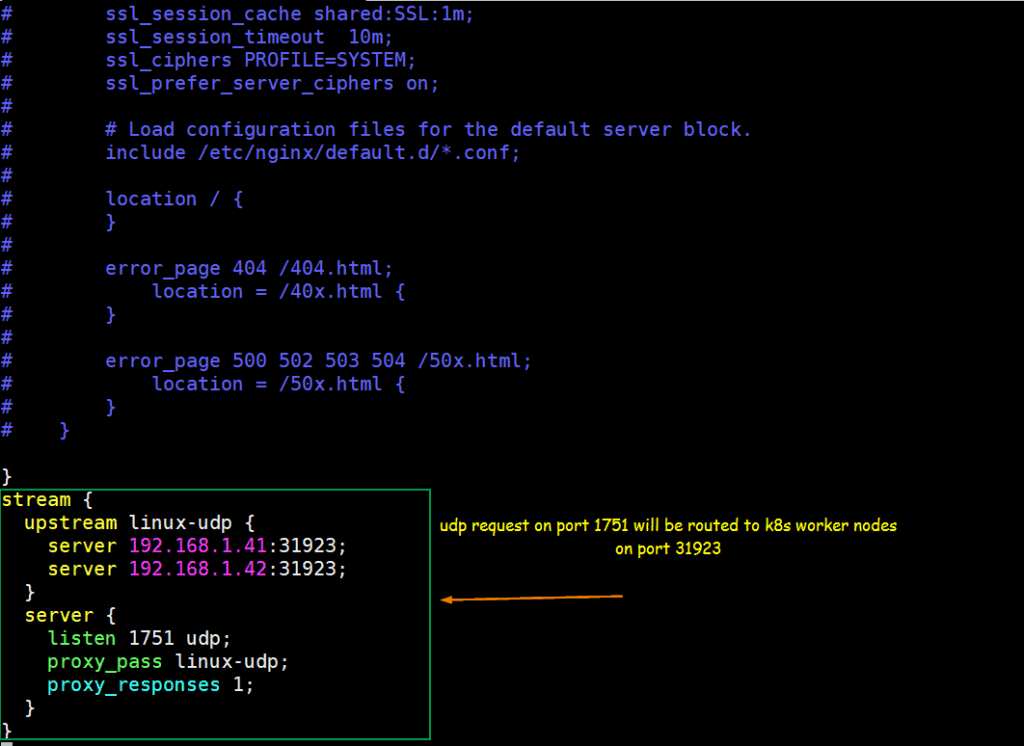

Step 5) Configure NGINX to act as UDP Load Balancer

Let’s suppose we have a UDP-based application running inside Kubernetes, exposed as a NodePort service on UDP port 31923. We will configure NGINX to load balance the UDP traffic arriving on port 1751 to that NodePort on the k8s worker nodes.

Let’s assume a pod named “linux-udp-pod” is already running and has the nc command available. Expose it via a service of type NodePort on UDP port 10001,

[kadmin@k8s-master ~]$ kubectl expose pod linux-udp-pod --type=NodePort --port=10001 --protocol=UDP

service/linux-udp-pod exposed

[kadmin@k8s-master ~]$ kubectl get svc linux-udp-pod

NAME            TYPE       CLUSTER-IP    EXTERNAL-IP   PORT(S)           AGE

linux-udp-pod   NodePort   10.96.6.216   <none>        10001:31923/UDP   19m

To configure NGINX as a UDP load balancer, edit its configuration file and add the following contents at the end of the file. The stream block must sit at the top level of the file, outside the http block.

[root@nginxlb ~]# vim /etc/nginx/nginx.conf

……

stream {

upstream linux-udp {

server 192.168.1.41:31923;

server 192.168.1.42:31923;

}

server {

listen 1751 udp;

proxy_pass linux-udp;

proxy_responses 1;

}

}

……

Save and exit the file, then restart the nginx service using the following command,

[root@nginxlb ~]# systemctl restart nginx
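When the worker list changes often, typing the stream block by hand gets error-prone. The sketch below prints an equivalent block from a list of node IPs; render_udp_stream is a hypothetical helper, and the upstream name, listen port and NodePort are simply the values used in this lab.

```shell
# render_udp_stream LISTEN_PORT NODE_PORT NODE_IP...
# Prints an NGINX stream block that forwards UDP traffic received on
# LISTEN_PORT to NODE_PORT on every NODE_IP.
render_udp_stream() {
  listen_port="$1"
  node_port="$2"
  shift 2
  printf 'stream {\n'
  printf '    upstream linux-udp {\n'
  for ip in "$@"; do
    printf '        server %s:%s;\n' "$ip" "$node_port"
  done
  printf '    }\n'
  printf '    server {\n'
  printf '        listen %s udp;\n' "$listen_port"
  printf '        proxy_pass linux-udp;\n'
  printf '        proxy_responses 1;\n'
  printf '    }\n'
  printf '}\n'
}

# Values from this lab: listen on 1751, forward to NodePort 31923.
render_udp_stream 1751 31923 192.168.1.41 192.168.1.42
```

After placing the generated block in /etc/nginx/nginx.conf, running nginx -t checks the syntax before you restart the service.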

Allow UDP port 1751 in the firewall by running the following commands,

[root@nginxlb ~]# firewall-cmd --permanent --add-port=1751/udp

[root@nginxlb ~]# firewall-cmd --reload

Test UDP Load balancing with above configured NGINX

Log in to the pod and start a dummy service which listens on UDP port 10001,

[kadmin@k8s-master ~]$ kubectl exec -it linux-udp-pod -- bash

root@linux-udp-pod:/# nc -l -u -p 10001

Leave this running. Log in to the machine from which you want to test UDP load balancing and make sure the NGINX server is reachable from it. Then run the following command to connect to UDP port 1751 on the NGINX server IP and type a test string,

# nc -u 192.168.1.50 1751

[root@linux-client ~]# nc -u 192.168.1.50 1751

Hello, this UDP LB testing

Now go back to the pod’s shell session; we should see the same message there,

root@linux-udp-pod:/# nc -l -u -p 10001

Hello, this UDP LB testing

Perfect, the above output confirms that UDP load balancing is working fine with NGINX. That’s all from this article. I hope you found it informative and that it helps you set up an NGINX load balancer. Please don’t hesitate to share your technical feedback in the comments section below.

I am not sure I understand your first config.

You are listening on 80, then proxying to http:// , plus you are changing some http header.

How can it be at TCP level ?

Yes, it is real time example. In Kubernetes if you want to load balance http traffic coming towards PODs from outside then nginx can be used as S/W Load balancer which sits in front of K8s cluster. Here Port 80 is an example tcp protocol used for serving web pages.