In this tutorial, we explain Kubernetes networking (Services, Ingress, and DNS) to help you understand how networking works in a Kubernetes cluster.

Kubernetes has become the de facto standard for deploying and managing containerized applications at scale. While its power for scheduling and resource management is well known, the underlying network model often seems daunting to newcomers. This blog post explains the key networking elements of Kubernetes (Services, Ingress, and DNS), giving you a basic understanding of how applications communicate within and outside of clusters.

At the heart of Kubernetes is the Pod, the smallest unit of deployment. Pods are designed to be ephemeral: they can be dynamically created, destroyed, and rescheduled between nodes in a cluster. Each Pod has its own IP address, which is unique within the cluster’s network. However, this poses a significant challenge: how can other Pods or external clients reliably communicate with a particular application instance when that instance’s IP address can change at any time? This is where Kubernetes’ network abstractions come into play.

Services

Think of a Service as a stable virtual IP address and DNS name that points to a set of healthy Pods, even as those Pods are created, destroyed, or moved. It acts as a load balancer and discovery mechanism.

When you define a Service, you specify selectors that match the labels on the Pods. The Service then continuously watches for Pods with these labels: if new Pods matching the selectors are created, they are automatically added to the Service’s endpoint list, and if a Pod is terminated, it is removed.
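As a minimal sketch of how label selection works, the Service below selects only Pods that carry every label listed under `selector`. The names and ports (`backend-svc`, `app: shop`, `tier: backend`, 8080) are hypothetical and are not part of this tutorial's examples.

```yaml
# Hypothetical Service: names, labels, and ports are illustrative only
apiVersion: v1
kind: Service
metadata:
  name: backend-svc
spec:
  selector:            # a Pod must carry ALL of these labels to become an endpoint
    app: shop
    tier: backend
  ports:
    - port: 80         # port that clients use on the Service
      targetPort: 8080 # port the selected Pods are assumed to listen on
```

With this selector, a Pod labeled `app: shop` and `tier: frontend` would not be added to the endpoint list, because only one of the two labels matches.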

Kubernetes offers several service types, each designed for a specific communication model:

- ClusterIP: This is the default type. It exposes the Service on an internal IP address in the cluster, so the Service can only be reached from within the cluster. It is well suited for internal microservice communication (e.g., a front-end Pod talking to a back-end Pod).

- NodePort: This type exposes the Service on a static port on each Node’s IP address. Clients outside the cluster can then connect to the Service via NodeIP:NodePort. While simple, it is not ideal for production, as it increases the attack surface and scales poorly.

- LoadBalancer: Available when running on a cloud provider (AWS, GCP, Azure, etc.). This type provisions an external cloud load balancer, which then routes external traffic to your Service. This is the standard way to expose public-facing applications.

- ExternalName: This type maps a Service to an external DNS name instead of a set of Pods. It is used for services that live outside the Kubernetes cluster (for example, external databases).
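To make the differences between the types above more concrete, here is a hedged sketch of a NodePort Service and an ExternalName Service. All names, the `app: web` label, the node port 30080, and the host `db.example.com` are assumptions chosen for illustration.

```yaml
# Hypothetical NodePort Service: reachable at <NodeIP>:30080 from outside the cluster
apiVersion: v1
kind: Service
metadata:
  name: web-nodeport
spec:
  type: NodePort
  selector:
    app: web
  ports:
    - port: 80        # port on the Service's cluster-internal IP
      targetPort: 80  # port on the selected Pods
      nodePort: 30080 # must fall in the NodePort range (30000-32767 by default)
---
# Hypothetical ExternalName Service: no selector, resolves to an external DNS name
apiVersion: v1
kind: Service
metadata:
  name: external-db
spec:
  type: ExternalName
  externalName: db.example.com
```

Changing `type: NodePort` to `type: LoadBalancer` (and dropping the `nodePort` line) would ask a supported cloud provider to provision an external load balancer instead.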

Ingress

Ingress is a Kubernetes API object that manages external access to Services within the cluster, typically over HTTP and HTTPS. It is a collection of routing rules that allow you to expose multiple Services under a single IP address, typically that of a single LoadBalancer.

Think of Ingress as a smart router at the edge of the cluster. Instead of giving each public-facing Service its own LoadBalancer (which can be costly), Ingress lets you define rules to route traffic to different back-end Services based on host names or URL paths.

The Ingress resource itself doesn’t do anything; it requires an Ingress Controller running in your cluster. Popular Ingress Controllers include the NGINX Ingress Controller, Traefik, HAProxy, and cloud provider-specific Ingress Controllers. Ingress Controllers watch Ingress resources and configure themselves according to the rules they contain (e.g., setting up NGINX proxy rules) to direct incoming external traffic to the appropriate back-end Services.

Key benefits of using Ingress:

- Single entry point: Consolidate multiple Services behind a single external IP address.

- Path-based routing: Route yourdomain.com/api to a back-end Service and yourdomain.com/app to a front-end Service.

- Host-based routing: Route api.yourdomain.com to one Service and app.yourdomain.com to another.

- SSL termination: Handle HTTPS traffic at the Ingress Controller level, simplifying certificate management for application Pods.
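The routing styles listed above could be combined in a single Ingress along the following lines. This is only a sketch: the Service names (`backend-service`, `frontend-service`) and the TLS Secret (`yourdomain-tls`) are hypothetical and would need to exist in your cluster, and an Ingress Controller is assumed to be installed.

```yaml
# Hypothetical Ingress: Service names and the TLS Secret are assumptions
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: example-routing
spec:
  tls:
    - hosts:
        - yourdomain.com
      secretName: yourdomain-tls # kubernetes.io/tls Secret with certificate and key
  rules:
    - host: yourdomain.com # path-based routing on one host
      http:
        paths:
          - path: /api
            pathType: Prefix
            backend:
              service:
                name: backend-service
                port:
                  number: 80
          - path: /app
            pathType: Prefix
            backend:
              service:
                name: frontend-service
                port:
                  number: 80
    - host: api.yourdomain.com # host-based routing
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: backend-service
                port:
                  number: 80
```

In this sketch only yourdomain.com is listed under `tls`; serving api.yourdomain.com over HTTPS as well would require adding it there with a certificate that covers it.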

DNS

Kubernetes relies heavily on DNS to discover services. When a Pod needs to communicate with another service in the cluster, it doesn’t need to know the ClusterIP of that service; instead, it can use the DNS name of the service.

Each service automatically obtains a DNS record. For a service named my-service in the my-namespace namespace, it can be discovered as my-service.my-namespace.svc.cluster.local. In the same namespace, you can usually just use my-service.

This DNS-based discovery is important because of the following:

- Decoupling: Pods don’t need to hard-code IP addresses.

- Flexibility: The DNS name of the Service remains the same even if the underlying Pods change.

- Scalability: When the service scales horizontally (adding more Pods), the DNS name still points to the service and then load balances across all healthy Pods.

Kubernetes clusters typically run a DNS server as Pods (such as CoreDNS). This DNS server answers DNS queries from other Pods: it resolves internal Service names to the corresponding ClusterIP and forwards queries for external names to an upstream DNS server.
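As an illustration of DNS-based discovery, a client Pod can be given the Service's DNS name rather than an IP address. The sketch below assumes the my-service and my-namespace names used above, the default cluster domain `cluster.local`, and a Service listening on port 80; the Pod name and the `BACKEND_URL` variable are hypothetical.

```yaml
# Hypothetical client Pod: Pod name, image, and variable name are illustrative only
apiVersion: v1
kind: Pod
metadata:
  name: dns-client
spec:
  restartPolicy: Never
  containers:
    - name: client
      image: busybox
      # The shell expands $BACKEND_URL at run time, then wget prints the response body
      command: ["sh", "-c", "wget -qO- $BACKEND_URL"]
      env:
        - name: BACKEND_URL
          # The fully qualified name works from any namespace in the cluster
          value: http://my-service.my-namespace.svc.cluster.local
```

Because the Pod only knows the name, the Pods behind my-service can be replaced or rescaled without changing this manifest.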

Example 1: Expose a Service Inside the Cluster

ClusterIP is used for intra-cluster communication. Other Pods can connect to our NGINX application through the Service’s stable DNS name.

Prerequisite: A running Kubernetes cluster. You can use k3s or minikube to spin up a local Kubernetes cluster.

First, we need to define our Pods. We will use a Deployment to manage multiple replicas of our NGINX server.

The Deployment creates and manages Pods, each with its own ephemeral IP. The Service (nginx-internal-service) uses a selector to find all Pods with the app: nginx label. It gets a stable ClusterIP and DNS name (nginx-internal-service). kube-proxy ensures that traffic sent to the Service’s IP and port is load balanced to the actual Pod IPs.

# deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  labels:
    app: nginx
spec:
  replicas: 3 # We want 3 instances of our NGINX server
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx # This label is crucial for the Service to select these Pods
    spec:
      containers:
        - name: nginx
          image: nginx:latest
          ports:
            - containerPort: 80 # NGINX listens on port 80

Apply this:

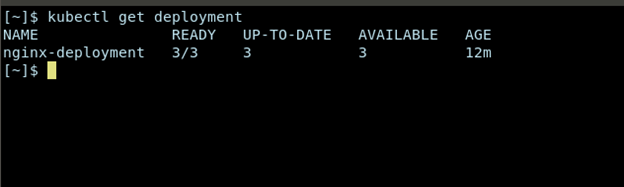

kubectl apply -f deployment.yaml
kubectl get pods -l app=nginx
kubectl get deployment

Caution: It might take 2-4 minutes for all replicas of the Deployment to become available. If you are using k3s and the Pods stay stuck in the Pending state, deleting the directory /var/lib/rancher/k3s resets the local k3s cluster (this wipes the cluster’s existing state), and restarting k3s afterwards should resolve the issue.

You will see three Pods, each with a unique IP address (e.g. 10.42.0.5, 10.42.0.6, 10.42.0.7). These IP addresses are internal to the cluster and may change if the Pod is restarted.

Let’s create a ClusterIP service.

# clusterip-service.yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-internal-service # DNS name will be 'nginx-internal-service'
spec:
  selector:
    app: nginx # This matches the label on our NGINX Pods
  ports:
    - protocol: TCP
      port: 80 # The port on the Service
      targetPort: 80 # The port on the Pod/Container
  type: ClusterIP # Default, but explicitly stated for clarity

Apply this:

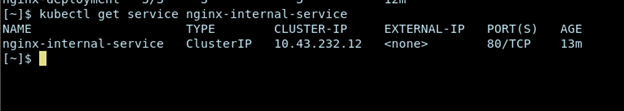

kubectl apply -f clusterip-service.yaml
kubectl get service nginx-internal-service

You will get an output similar to this:

CLUSTER-IP (e.g. 10.43.232.12) is a stable virtual IP address. Any Pod in the cluster can now reach one of the NGINX Pods by connecting to nginx-internal-service (the DNS name) or 10.43.232.12 on port 80.

Verify DNS resolution

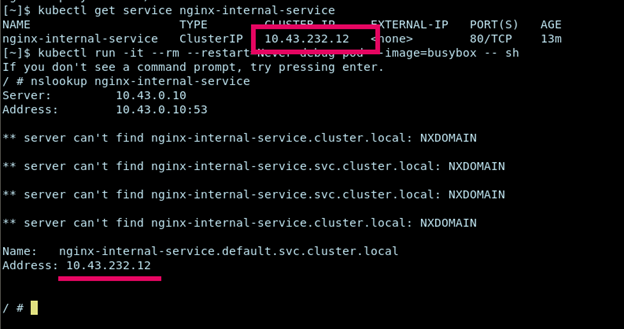

Let’s launch a temporary Pod and try to resolve the Service’s DNS name:

kubectl run -it --rm --restart=Never debug-pod --image=busybox -- sh

Inside the busybox Pod’s shell, run this command:

nslookup nginx-internal-service

This should resolve to the ClusterIP of nginx-internal-service.

This shows how Kubernetes’ DNS system automatically provides service discovery. From the same shell, you can then run wget http://nginx-internal-service to fetch the NGINX welcome page.

Example 2: Set Up Ingress

To make our NGINX application reachable from the Internet over HTTP/HTTPS, we use Ingress.

The Ingress (nginx-ingress) defines rules for the host linuxtechi.com to route external HTTP/HTTPS traffic. The Ingress Controller watches this resource and configures its proxy (or an external load balancer, depending on the controller) to direct linuxtechi.com traffic to nginx-internal-service on port 80.

The cluster’s internal DNS (CoreDNS) ensures that Pods accessing nginx-internal-service resolve it to the correct, stable ClusterIP. An external DNS record (managed outside of Kubernetes) maps linuxtechi.com to the public IP of the Ingress Controller.

Prerequisite: A running Kubernetes cluster with an Ingress Controller installed.

# ingress.yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx-ingress
  annotations:
    # This annotation is specific to the NGINX Ingress Controller for rewrite
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  rules:
    - host: linuxtechi.com # This is the domain you want to use
      http:
        paths:
          - path: / # Route all traffic on this path
            pathType: Prefix # Matches any path starting with '/'
            backend:
              service:
                name: nginx-internal-service # Point to our ClusterIP Service
                port:
                  number: 80 # The port of the Service to route to

You typically need to create a DNS record at your DNS provider (e.g., GoDaddy, Cloudflare) that points linuxtechi.com to the external IP address of the Ingress Controller’s LoadBalancer Service.

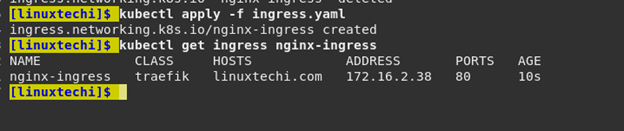

Apply Ingress:

kubectl apply -f ingress.yaml
kubectl get ingress nginx-ingress

The ADDRESS field (172.16.2.38 in this example) will be the external IP address provided by your cloud provider’s LoadBalancer or your Ingress Controller. If you are running locally, for example on Minikube, you may get 127.0.0.1 and need to run minikube tunnel.

Now, if you have DNS set up, navigating to http://linuxtechi.com in your web browser will show you the NGINX welcome page, and Ingress will handle routing to your nginx-internal-service, which will then load-balance traffic across your NGINX Pods.

Conclusion

Together, Pod IPs, Services, Ingress, and DNS form the backbone of highly available and scalable containerized applications on Kubernetes. By understanding these core concepts (how Services provide stable endpoints for ephemeral Pods, how Ingress routes external HTTP/HTTPS traffic, and how DNS enables seamless service discovery), you will be able to design, deploy, and troubleshoot applications in a Kubernetes cluster effectively. These fundamentals are critical for anyone venturing into the world of cloud-native application deployment.