Network bonding is the aggregation of multiple LAN cards (eth0 and eth1) into a single interface called a bond interface. Network bonding is a kernel feature and is also known as NIC teaming. Bonding is generally used to provide high availability and load balancing.

In this tutorial we will configure network bonding on CentOS 6.x and RHEL 6.x. In my case I have two LAN cards (eth0 and eth1) and will create a bond interface (bond0).

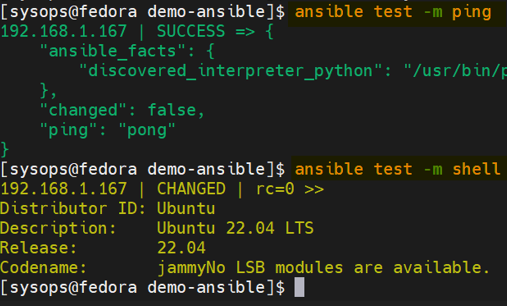

Step:1 Create the bond file (ifcfg-bond0) and specify the IP address, netmask and gateway

    # vi /etc/sysconfig/network-scripts/ifcfg-bond0
    DEVICE=bond0
    IPADDR=192.168.1.9
    NETMASK=255.255.255.0
    GATEWAY=192.168.1.1
    TYPE=Bond
    ONBOOT=yes
    NM_CONTROLLED=no
    BOOTPROTO=static

Step:2 Edit the files of eth0 and eth1 and make sure you add the MASTER and SLAVE entries, as shown below

    # vi /etc/sysconfig/network-scripts/ifcfg-eth0
    DEVICE=eth0
    HWADDR=08:00:27:5C:A8:8F
    TYPE=Ethernet
    ONBOOT=yes
    NM_CONTROLLED=no
    MASTER=bond0
    SLAVE=yes

    # vi /etc/sysconfig/network-scripts/ifcfg-eth1
    DEVICE=eth1
    TYPE=Ethernet
    ONBOOT=yes
    NM_CONTROLLED=no
    MASTER=bond0
    SLAVE=yes

Step:3 Create the bonding configuration file (bonding.conf)

    # vi /etc/modprobe.d/bonding.conf
    alias bond0 bonding
    options bond0 mode=1 miimon=100

The different modes that can be used in the bonding.conf file:

- balance-rr or 0: Sets a round-robin mode for fault tolerance and load balancing.

- active-backup or 1: Sets an active-backup mode for fault tolerance.

- balance-xor or 2: Sets an XOR (exclusive-or) mode for fault tolerance and load balancing.

- broadcast or 3: Sets a broadcast mode for fault tolerance. All transmissions are sent on all slave interfaces.

- 802.3ad or 4: Sets an IEEE 802.3ad dynamic link aggregation mode. Creates aggregation groups that share the same speed and duplex settings.

- balance-tlb or 5: Sets a Transmit Load Balancing (TLB) mode for fault tolerance and load balancing.

- balance-alb or 6: Sets an Adaptive Load Balancing (ALB) mode for fault tolerance and load balancing.
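Since each mode can be given either by name or by number, a small lookup can make a bonding.conf line easier to read. The following is only a sketch: the function names `bond_mode_name` and `bond_options_line` are made up for this illustration and are not part of any bonding tool.

```shell
#!/bin/sh
# Hypothetical helper: translate a numeric bonding mode into its name,
# following the list of modes above.
bond_mode_name() {
    case "$1" in
        0) echo "balance-rr" ;;
        1) echo "active-backup" ;;
        2) echo "balance-xor" ;;
        3) echo "broadcast" ;;
        4) echo "802.3ad" ;;
        5) echo "balance-tlb" ;;
        6) echo "balance-alb" ;;
        *) echo "unknown"; return 1 ;;
    esac
}

# Hypothetical helper: print an "options" line for bonding.conf.
# Arguments: bond name, numeric mode, miimon interval in ms.
bond_options_line() {
    name=$(bond_mode_name "$2") || return 1
    echo "options $1 mode=$name miimon=$3"
}
```

For example, `bond_options_line bond0 1 100` prints `options bond0 mode=active-backup miimon=100`, which describes the same mode as the `mode=1` line used in this tutorial.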

Step:4 Now restart the network service

    # service network restart

Use the command below to check the bond interface

    [root@localhost ~]# ifconfig bond0
    bond0     Link encap:Ethernet  HWaddr 08:00:27:5C:A8:8F
              inet addr:192.168.1.9  Bcast:192.168.1.255  Mask:255.255.255.0
              inet6 addr: fe80::a00:27ff:fe5c:a88f/64 Scope:Link
              UP BROADCAST RUNNING MASTER MULTICAST  MTU:1500  Metric:1
              RX packets:6164 errors:0 dropped:0 overruns:0 frame:0
              TX packets:1455 errors:0 dropped:0 overruns:0 carrier:0
              collisions:0 txqueuelen:0
              RX bytes:482336 (471.0 KiB)  TX bytes:271221 (264.8 KiB)

Step:5 Verify the status of the bond interface.

    [root@localhost ~]# cat /proc/net/bonding/bond0
    Ethernet Channel Bonding Driver: v3.6.0 (September 26, 2009)

    Bonding Mode: fault-tolerance (active-backup)
    Primary Slave: None
    Currently Active Slave: eth0
    MII Status: up
    MII Polling Interval (ms): 100
    Up Delay (ms): 0
    Down Delay (ms): 0

    Slave Interface: eth0
    MII Status: up
    Speed: 1000 Mbps
    Duplex: full
    Link Failure Count: 0
    Permanent HW addr: 08:00:27:5c:a8:8f
    Slave queue ID: 0

    Slave Interface: eth1
    MII Status: up
    Speed: 1000 Mbps
    Duplex: full
    Link Failure Count: 0
    Permanent HW addr: 08:00:27:7f:04:49
    Slave queue ID: 0
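If you only need the active slave and the link state of each slave, the status file can be filtered with awk. This is a rough sketch that assumes the file layout shown in the output above; the function names `active_slave` and `slave_status` are invented for this example.

```shell
#!/bin/sh
# Hypothetical helper: print the currently active slave.
# The optional argument is the status file; it defaults to bond0 from this tutorial.
active_slave() {
    awk -F': ' '/^Currently Active Slave:/ { print $2 }' "${1:-/proc/net/bonding/bond0}"
}

# Hypothetical helper: print "<interface> <MII status>" for every slave.
# The bond-level "MII Status" line is skipped because no slave has been seen yet.
slave_status() {
    awk -F': ' '
        /^Slave Interface:/ { iface = $2 }
        /^MII Status:/ && iface != "" { print iface, $2; iface = "" }
    ' "${1:-/proc/net/bonding/bond0}"
}
```

Run against the output shown above, `active_slave` would print `eth0`, and `slave_status` would print one line per slave, such as `eth0 up` and `eth1 up`.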

Note: As per the output shown above, we are currently using active-backup bonding. To test it, disable one interface and check whether you can still reach the machine using the bond IP.
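The test described in the note can be sketched as a short script. This is an illustration under assumptions, not a definitive procedure: the `run` wrapper, the `DRY_RUN` switch and the `failover_test` function are names invented here, and the script assumes the bond0/eth0 setup from this tutorial and must be run as root. While eth0 is down, ping the bond IP (192.168.1.9 in this tutorial) from another machine.

```shell
#!/bin/sh
# Hypothetical wrapper: with DRY_RUN=1 the command is printed instead of executed.
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Hypothetical failover drill: take one slave down, show which slave is
# active afterwards, then bring the slave back up.
# Arguments: bond name (default bond0), slave to disable (default eth0).
failover_test() {
    bond="${1:-bond0}"
    nic="${2:-eth0}"
    run ifdown "$nic"
    run grep "Currently Active Slave" "/proc/net/bonding/$bond"
    run ifup "$nic"
}
```

Calling `DRY_RUN=1 failover_test` only prints the three commands, which is a safe way to review them before running the drill for real with `failover_test bond0 eth0`.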

When I brought one of the NICs (eth0) down, bonding stopped working, but /proc/net/bonding/bond0 was showing everything fine and bond0 was showing the IP too. As soon as I enabled eth0, the server started responding to ping again. Please help.

Hi Navneet,

Please check whether the second interface (eth1) is detected and its link is up using the command “ethtool eth1”.
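The same check can be scripted by looking for the `Link detected` line in the ethtool output. This is a small sketch; the function name `link_detected` is made up for this example.

```shell
#!/bin/sh
# Hypothetical helper: reads `ethtool <interface>` output on stdin and
# succeeds only if ethtool reports that the link is up.
link_detected() {
    grep -q 'Link detected: yes'
}
```

It would be used as `ethtool eth1 | link_detected && echo "eth1 link is up"`.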

I’m noticing in the RHEL documentation that they recommend *NOT* using modprobe.conf with later RHEL releases. They recommend using “BONDING_OPTS=” in the ifcfg-bond[#] file instead so that it’s all in one place. It is supposed to work in later kernel releases.
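As a sketch of what that could look like for this tutorial's setup, the bonding parameters from Step 3 would move into ifcfg-bond0 as a `BONDING_OPTS` line. The values below are simply the ones used earlier in the article; this assumes the `options bond0 ...` line is then dropped from bonding.conf so the settings are not defined twice.

```shell
# /etc/sysconfig/network-scripts/ifcfg-bond0
# Same settings as Step 1, plus the bonding parameters from Step 3.
DEVICE=bond0
IPADDR=192.168.1.9
NETMASK=255.255.255.0
GATEWAY=192.168.1.1
TYPE=Bond
ONBOOT=yes
NM_CONTROLLED=no
BOOTPROTO=static
BONDING_OPTS="mode=1 miimon=100"
```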

Hi,

For round-robin I have a problem. If I bring down nic1 from the console there is no interruption in my PuTTY session, but if I bring down nic2 then my PuTTY session becomes inactive until I restart the network service. Do we really need to restart the network service once a NIC goes bad?

Hi Kiran,

Are you testing bonding on virtual machines hosted on VirtualBox?

If you want to achieve fault tolerance and load balancing, then go for either mode 5 or mode 6. As the name suggests, in round-robin mode packets flow across the interfaces in a round-robin way.