Kubernetes is a free and open-source container orchestration tool. It is used to deploy container-based applications automatically in a cluster environment and to manage Docker containers across the hosts of a Kubernetes cluster. Kubernetes is also known as K8s.

In this article I will demonstrate how to install and configure a Kubernetes (1.13) cluster with two worker nodes using kubeadm on Ubuntu 18.04 / 18.10 systems. Following are the details of my lab setup:

I will be using three Ubuntu 18.04 LTS systems: one will act as the Kubernetes Master Node, and the other two will act as Slave Nodes and join the Kubernetes cluster. I am assuming that minimal Ubuntu 18.04 LTS is installed on all three systems.

- Kubernetes Master Node – (Hostname: k8s-master, IP: 192.168.1.70, OS: Minimal Ubuntu 18.04 LTS)

- Kubernetes Slave Node 1 – (Hostname: k8s-worker-node1, IP: 192.168.1.80, OS: Minimal Ubuntu 18.04 LTS)

- Kubernetes Slave Node 2 – (Hostname: k8s-worker-node2, IP: 192.168.1.90, OS: Minimal Ubuntu 18.04 LTS)

Note: Kubernetes Slave Node is also known as Worker Node

Let’s jump into the k8s installation and configuration steps.

Step:1) Set Hostname and update hosts file

Log in to the master node and configure its hostname using the hostnamectl command,

linuxtechi@localhost:~$ sudo hostnamectl set-hostname "k8s-master"
linuxtechi@localhost:~$ exec bash
linuxtechi@k8s-master:~$

Log in to the Slave / Worker Nodes and configure their hostnames using the hostnamectl command,

linuxtechi@localhost:~$ sudo hostnamectl set-hostname k8s-worker-node1
linuxtechi@localhost:~$ exec bash
linuxtechi@k8s-worker-node1:~$

linuxtechi@localhost:~$ sudo hostnamectl set-hostname k8s-worker-node2
linuxtechi@localhost:~$ exec bash
linuxtechi@k8s-worker-node2:~$

Add the following lines in /etc/hosts file on all three systems,

192.168.1.70 k8s-master
192.168.1.80 k8s-worker-node1
192.168.1.90 k8s-worker-node2
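Because the same three entries have to go into /etc/hosts on every node, it can help to script the step so that running it twice does not create duplicates. The following is only a minimal sketch and not part of the original procedure: the function name `add_host_entry` is hypothetical, and the file to edit is passed as an argument so the function can be tried on a scratch copy first.

```shell
# add_host_entry FILE IP NAME
# Appends "IP NAME" to FILE unless an uncommented line already maps NAME.
# (Hypothetical helper; the name and arguments are illustrative only.)
add_host_entry() (
    file=$1 ip=$2 name=$3
    # Look for NAME as a whole whitespace-delimited field after the address.
    if awk -v n="$name" '
        $1 !~ /^#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }' "$file"
    then
        echo "$name already present in $file"
    else
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
)

# Example: edit a copy, inspect it, then install it.
#   cp /etc/hosts /tmp/hosts.new
#   add_host_entry /tmp/hosts.new 192.168.1.70 k8s-master
#   add_host_entry /tmp/hosts.new 192.168.1.80 k8s-worker-node1
#   add_host_entry /tmp/hosts.new 192.168.1.90 k8s-worker-node2
#   sudo cp /tmp/hosts.new /etc/hosts
```

Working on a copy keeps the real file untouched until the result has been checked.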

Step:2) Install and Start Docker Service on Master and Slave Nodes

Run the below apt-get command to install Docker on Master node,

linuxtechi@k8s-master:~$ sudo apt-get install docker.io -y

Run the below apt-get command to install docker on slave nodes,

linuxtechi@k8s-worker-node1:~$ sudo apt-get install docker.io -y
linuxtechi@k8s-worker-node2:~$ sudo apt-get install docker.io -y

Once the Docker packages are installed on all three systems, start and enable the docker service using the systemctl commands below. These commands need to be executed on the master and slave nodes.

~$ sudo systemctl start docker
~$ sudo systemctl enable docker
Synchronizing state of docker.service with SysV service script with /lib/systemd/systemd-sysv-install.
Executing: /lib/systemd/systemd-sysv-install enable docker
~$

Use below docker command to verify which Docker version has been installed on these systems,

~$ docker --version
Docker version 18.06.1-ce, build e68fc7a
~$
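With Docker installed this way, kubeadm's preflight checks may later warn that Docker is using the "cgroupfs" cgroup driver where "systemd" is recommended (the warning is quoted in the reader comments at the end of this article). Below is a minimal sketch of one way to switch the driver, assuming Docker reads its settings from /etc/docker/daemon.json and that no such file exists yet. The function name `write_docker_daemon_json` is hypothetical.

```shell
# write_docker_daemon_json FILE
# Writes a minimal Docker daemon configuration that selects the systemd
# cgroup driver. It overwrites FILE, so merge by hand if one already exists.
write_docker_daemon_json() {
    cat > "$1" <<'EOF'
{
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
}

# Example (as root, on every node):
#   write_docker_daemon_json /etc/docker/daemon.json
#   systemctl restart docker
#   docker info --format '{{.CgroupDriver}}'   # shows the active driver
```

The warning is not fatal, so this step is optional for the lab setup described here.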

Step:3) Configure Kubernetes Package Repository on Master & Slave Nodes

Note: All the commands in this step must be run on the master and slave nodes.

Let’s first install some required packages. Run the following command on all the nodes, including the master node,

~$ sudo apt-get install apt-transport-https curl -y

Now add Kubernetes package repository key using the following command,

:~$ curl -s https://packages.cloud.google.com/apt/doc/apt-key.gpg | sudo apt-key add -
OK
:~$

Now configure the Kubernetes repository using the apt command below. At the time of writing, a Kubernetes package repository for Ubuntu 18.04 (Bionic Beaver) is not available, so we will use the Xenial Kubernetes package repository.

:~$ sudo apt-add-repository "deb http://apt.kubernetes.io/ kubernetes-xenial main"

Step:4) Disable Swap and Install Kubeadm on all the nodes

Note: All the commands in this step must be run on the master and slave nodes.

Kubeadm is one of the most common methods used to deploy a Kubernetes cluster; in other words, it is used to deploy multiple nodes in a Kubernetes cluster.

As per the official Kubernetes web site, swap should be disabled on all the nodes, including the master node. By default the kubelet refuses to start while swap is enabled.

Run the following command to disable swap temporarily,

:~$ sudo swapoff -a

To disable swap permanently, comment out the swapfile or swap partition entry in the /etc/fstab file.
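The fstab edit can also be scripted. The sketch below is one possible approach rather than this article's own method: a hypothetical filter, `comment_swap_entries`, prints a copy of an fstab-style file with every active swap entry commented out. It assumes swap entries can be recognised by the value "swap" in the third (filesystem type) field.

```shell
# comment_swap_entries FILE
# Prints FILE to stdout with each uncommented swap entry prefixed by "#".
# A swap entry is recognised by "swap" in the third field (the fs type).
comment_swap_entries() {
    awk '$1 !~ /^#/ && $3 == "swap" { print "#" $0; next } { print }' "$1"
}

# Example: review the result before replacing the real file.
#   comment_swap_entries /etc/fstab > /tmp/fstab.new
#   diff /etc/fstab /tmp/fstab.new
#   sudo cp /tmp/fstab.new /etc/fstab
```

Printing to stdout instead of editing in place leaves /etc/fstab unchanged until the diff has been reviewed.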

Now install the kubeadm package on all the nodes, including the master. The kubelet and kubectl packages are installed along with it as dependencies.

:~$ sudo apt-get install kubeadm -y

Once the kubeadm packages are installed successfully, verify the kubeadm version using the following command.

:~$ kubeadm version

kubeadm version: &version.Info{Major:"1", Minor:"13", GitVersion:"v1.13.2", GitCommit:"cff46ab41ff0bb44d8584413b598ad8360ec1def", GitTreeState:"clean", BuildDate:"2019-01-10T23:33:30Z", GoVersion:"go1.11.4", Compiler:"gc", Platform:"linux/amd64"}

:~$

Step:5) Initialize and Start Kubernetes Cluster on Master Node using Kubeadm

Use the kubeadm command below, on the Master Node only, to initialize Kubernetes,

linuxtechi@k8s-master:~$ sudo kubeadm init --pod-network-cidr=172.168.10.0/24

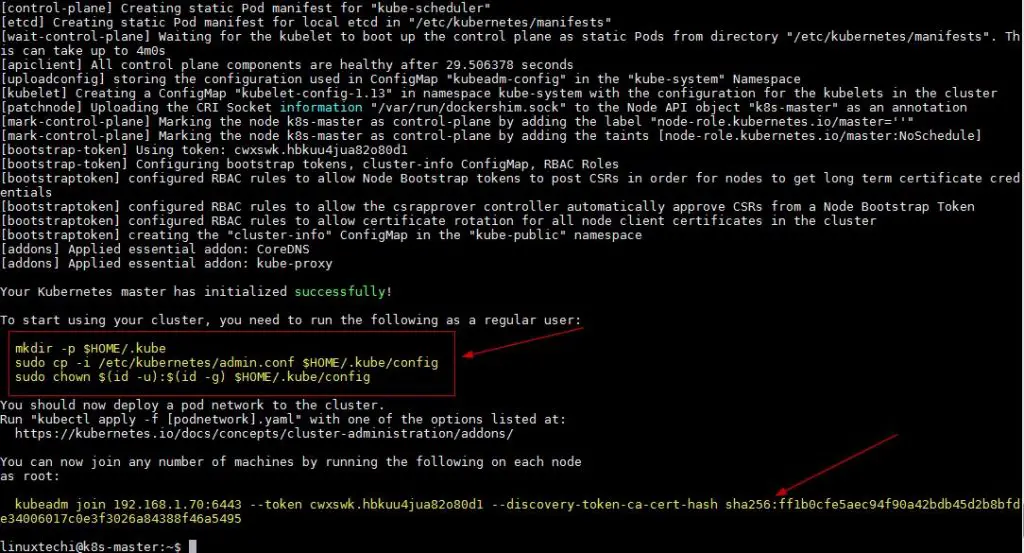

In the above command you can use the same pod network or choose one that suits your environment. Once the command has executed successfully, we will get output something like that shown in the screenshot.

The above output confirms that the master node has been initialized successfully. To start using the cluster, run the following commands one after another,

linuxtechi@k8s-master:~$ mkdir -p $HOME/.kube
linuxtechi@k8s-master:~$ sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
linuxtechi@k8s-master:~$ sudo chown $(id -u):$(id -g) $HOME/.kube/config
linuxtechi@k8s-master:~$

Verify the status of master node using the following command,

linuxtechi@k8s-master:~$ kubectl get nodes
NAME         STATUS     ROLES    AGE   VERSION
k8s-master   NotReady   master   18m   v1.13.2
linuxtechi@k8s-master:~$

As we can see in the above command output, our master node is not ready because we have not yet deployed a pod network.

Let’s deploy the pod network. The pod network is the network through which pods on our cluster nodes communicate with each other. We will deploy Flannel as our pod network; it provides the overlay network between the cluster nodes.
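The range passed to --pod-network-cidr in the kubeadm init command above matters in size as well as in address. Assuming the controller manager's default behaviour of carving one /24 out of the pod network for each node (the `--node-cidr-mask-size` default for IPv4), the number of nodes that can receive a pod subnet follows from the prefix length. Here is a small sketch of that arithmetic; the helper name `node_subnet_count` is hypothetical.

```shell
# node_subnet_count CIDR [NODE_MASK]
# Prints how many per-node subnets of size /NODE_MASK (default 24) fit
# into the pod network CIDR. Prints 0 if the pod network is smaller
# than a single node subnet.
node_subnet_count() (
    prefix=${1#*/}
    node_mask=${2:-24}
    if [ "$prefix" -gt "$node_mask" ]; then
        echo 0
    else
        echo $(( 1 << (node_mask - prefix) ))
    fi
)

node_subnet_count 172.168.10.0/24   # prints 1
node_subnet_count 10.244.0.0/16     # prints 256
```

Under these assumptions a /24 pod network leaves room for a single node subnet, which would be consistent with the "pod cidr not assigned" error that some readers report on worker nodes in the comments. Flannel's stock manifest is written for 10.244.0.0/16, so a different range may also require editing the network setting in kube-flannel.yml to match.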

Step:6) Deploy Flannel as Pod Network from Master node and verify pod namespaces

Execute the following kubectl command to deploy pod network from master node

linuxtechi@k8s-master:~$ sudo kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

Output of above command should be something like below

clusterrole.rbac.authorization.k8s.io/flannel created
clusterrolebinding.rbac.authorization.k8s.io/flannel created
serviceaccount/flannel created
configmap/kube-flannel-cfg created
daemonset.extensions/kube-flannel-ds-amd64 created
daemonset.extensions/kube-flannel-ds-arm64 created
daemonset.extensions/kube-flannel-ds-arm created
daemonset.extensions/kube-flannel-ds-ppc64le created
daemonset.extensions/kube-flannel-ds-s390x created
linuxtechi@k8s-master:~$

Now verify the master node status and pod namespaces using kubectl command,

linuxtechi@k8s-master:~$ sudo kubectl get nodes
NAME         STATUS   ROLES    AGE   VERSION
k8s-master   Ready    master   78m   v1.13.2
linuxtechi@k8s-master:~$
linuxtechi@k8s-master:~$ sudo kubectl get pods --all-namespaces
NAMESPACE     NAME                                 READY   STATUS    RESTARTS   AGE
kube-system   coredns-86c58d9df4-px4sj             1/1     Running   0          79m
kube-system   coredns-86c58d9df4-wzdzk             1/1     Running   0          79m
kube-system   etcd-k8s-master                      1/1     Running   1          79m
kube-system   kube-apiserver-k8s-master            1/1     Running   1          79m
kube-system   kube-controller-manager-k8s-master   1/1     Running   1          79m
kube-system   kube-flannel-ds-amd64-9tn8z          1/1     Running   0          14m
kube-system   kube-proxy-cjzz2                     1/1     Running   1          79m
kube-system   kube-scheduler-k8s-master            1/1     Running   1          79m
linuxtechi@k8s-master:~$

As we can see in the above output, our master node status has changed to “Ready” and all the pods in the kube-system namespace are in the Running state. This confirms that our master node is healthy and ready to form a cluster.

Step:7) Add Slave or Worker Nodes to the Cluster

Note: The kubeadm output in Step 5 included the complete command that we have to run on each slave or worker node to join the cluster.

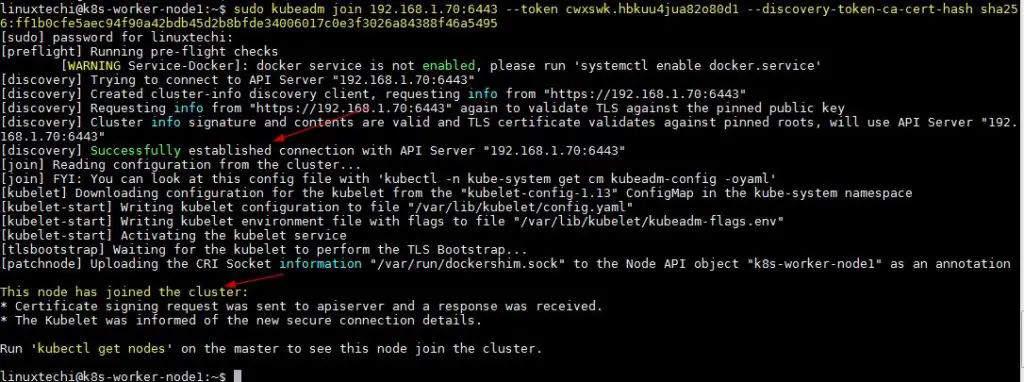
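The join command has three parts: the API server endpoint on the master (port 6443), a bootstrap token, and a SHA-256 hash of the cluster CA's public key, which lets the worker verify that it is talking to the intended master. As an illustration only, the hypothetical helper below assembles the command from those parts and checks that the token has the form kubeadm expects (six characters, a dot, then sixteen characters, all lowercase letters or digits).

```shell
# build_join_command ENDPOINT TOKEN CA_HASH
# Prints a kubeadm join command, or returns 1 if the token or the hash
# is malformed. (Hypothetical helper, for illustration only.)
build_join_command() (
    endpoint=$1 token=$2 ca_hash=$3
    # Bootstrap tokens look like "abcdef.0123456789abcdef".
    printf '%s\n' "$token" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$' || {
        echo "malformed token: $token" >&2
        exit 1
    }
    # The CA hash is a SHA-256 digest written as 64 hex digits.
    printf '%s\n' "$ca_hash" | grep -Eq '^[0-9a-f]{64}$' || {
        echo "malformed CA hash" >&2
        exit 1
    }
    echo "kubeadm join $endpoint --token $token --discovery-token-ca-cert-hash sha256:$ca_hash"
)

# Values taken from this article's lab setup:
build_join_command 192.168.1.70:6443 cwxswk.hbkuu4jua82o80d1 \
    ff1b0cfe5aec94f90a42bdb45d2b8bfde34006017c0e3f3026a84388f46a5495
```

If the token from Step 5 has expired (by default bootstrap tokens are valid for 24 hours), running `kubeadm token create --print-join-command` on the master prints a fresh join command.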

Log in to the first slave node (k8s-worker-node1) and run the following command to join the cluster,

linuxtechi@k8s-worker-node1:~$ sudo kubeadm join 192.168.1.70:6443 --token cwxswk.hbkuu4jua82o80d1 --discovery-token-ca-cert-hash sha256:ff1b0cfe5aec94f90a42bdb45d2b8bfde34006017c0e3f3026a84388f46a5495

Output of above command should be something like this,

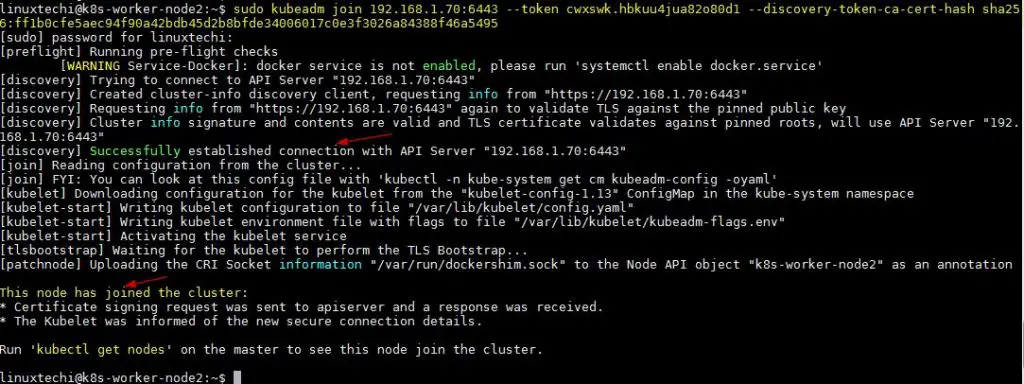

Similarly run the same kubeadm join command on the second worker node,

linuxtechi@k8s-worker-node2:~$ sudo kubeadm join 192.168.1.70:6443 --token cwxswk.hbkuu4jua82o80d1 --discovery-token-ca-cert-hash sha256:ff1b0cfe5aec94f90a42bdb45d2b8bfde34006017c0e3f3026a84388f46a5495

The output should be similar to that of the first worker node.

Now go to master node and run below command to check master and slave node status

linuxtechi@k8s-master:~$ kubectl get nodes
NAME               STATUS   ROLES    AGE    VERSION
k8s-master         Ready    master   100m   v1.13.2
k8s-worker-node1   Ready    <none>   10m    v1.13.2
k8s-worker-node2   Ready    <none>   4m6s   v1.13.2
linuxtechi@k8s-master:~$

The above command output confirms that we have successfully added our two worker nodes to the cluster and that their state is Ready. This concludes the article: we have successfully installed and configured a Kubernetes cluster with two worker nodes on Ubuntu 18.04 systems.
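The same check can be scripted by parsing the STATUS column instead of reading it by eye. Below is a minimal sketch, assuming the column layout of the kubectl get nodes output (NAME first, STATUS second) and using a hypothetical function name. It reads the output of `kubectl get nodes --no-headers` on standard input.

```shell
# not_ready_nodes
# Reads "kubectl get nodes --no-headers" output on stdin and prints the
# names of nodes whose STATUS is anything other than exactly "Ready".
# Returns 0 when every node is Ready, 1 otherwise.
not_ready_nodes() {
    awk 'NF && $2 != "Ready" { print $1; bad = 1 } END { exit bad + 0 }'
}

# Example on the master:
#   kubectl get nodes --no-headers | not_ready_nodes && echo "all nodes Ready"
```

A node that has just joined can take a short while to become Ready, so a non-zero result right after kubeadm join is not necessarily an error.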

Read More on: Deploy Pod, Replication Controller and Service in Kubernetes

Excellent, worked a charm!

Got some issues on Ubuntu 18.04 / docker 18.06 / kubeadm 1.13 with flannel pod not starting on worker nodes.

“Error registering network: failed to acquire lease: node “” pod cidr not assigned”

The solution was as per ‘https://github.com/coreos/flannel/blob/fd8c28917f338a30b27534512292cd5037696634/Documentation/troubleshooting.md#kubernetes-specific’ to patch the nodes with the podCidr:

kubectl patch node -p '{"spec":{"podCIDR":""}}'

Maybe it’ll save some time for someone.

Thanks for your work.

Issue in ubuntu 18.04 : When machine is rebooted, kubernetes is not started automatically and kubeadm has to be reset and worker has to join again.

Thank you. Using the article I was able to deploy a k8s cluster in a few minutes, running the master and worker nodes as VMs under VirtualBox.

If you’re getting kubectl get nodes status ‘NotReady’, its because flannel is broken(?)

Use Cilium instead with this command:

kubectl apply -f 'https://raw.githubusercontent.com/cilium/cilium/master/examples/kubernetes/1.14/cilium.yaml'

nice … thanks!

root@k8s-worker-node1:~# sudo kubeadm join 10.0.2.15:6443 --token q2r9un.l7bapq1m18wtd9ij \
> --discovery-token-ca-cert-hash sha256:72e5b3bcf18ae48d107da8a393b70b4896ca5abb8ec6609da98b4710b080ec55
[preflight] Running pre-flight checks
[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at 'https://kubernetes.io/docs/setup/cri/'

Note: When executed on the slave node, the command does not go any further and stays stuck there. Does anyone know this problem?

1) Check that you can run “kubectl get nodes” on the master. If you cannot because port 6443 is closed, run “strace -eopenat kubectl version” and retry.

2) If you can no longer ping the master, run “sudo ip link set cni0 down”.

With both of these commands, I was then able to join the master from a slave.

Please do kubeadm reset on the worker node.

That should basically solve it, since the config was already present on the machine.

I got following error.

[init] Using Kubernetes version: v1.15.1
[preflight] Running pre-flight checks
[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at 'https://kubernetes.io/docs/setup/cri/'
error execution phase preflight: [preflight] Some fatal errors occurred:
[ERROR Port-10251]: Port 10251 is in use
[ERROR Port-10252]: Port 10252 is in use
[ERROR Port-10250]: Port 10250 is in use
[ERROR Port-2380]: Port 2380 is in use
[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=…`

Worked for me. Step by step explained. Thanks

The ‘Not ready’ status on slave nodes, in my case, was due to a missing package in the nodes. Solved with apt-get install apt-transport-https. Then removed the nodes from the cluster (in master node), and joined the nodes to the cluster again from the slave nodes. A minute later, all nodes were ‘Ready’